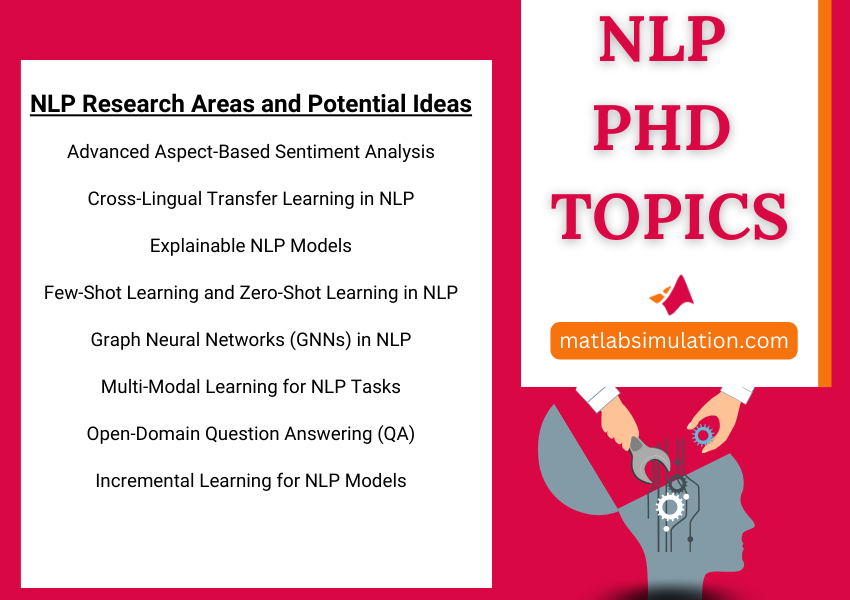

NLP (Natural Language Processing) is now widely applied in areas such as machine translation, text analytics, graph-based modeling with GNNs and multimodal learning. For your PhD research, we recommend the following promising research areas, each accompanied by key papers, open problems and possible research directions:

- Advanced Aspect-Based Sentiment Analysis (ABSA)

- Reviews:

- Survey ABSA approaches from the early lexicon-based frameworks to the latest transformer-based models, and analyze representative case studies.

- Main Papers: A Unified ABSA Framework Based on Pre-Trained Transformers (Sun et al., 2019), Attention-Based LSTM for Aspect-Level Sentiment Classification (Wang et al., 2016).

- Issues:

- Aspect Detection: Identifying implicit aspects, which the review text does not state explicitly, is difficult.

- Sentiment Classification: Domain-specific sentiment expressions must be handled correctly.

- Research Concepts:

- To identify implicit aspects, create a multi-task model that learns aspect identification and aspect classification jointly.

- For ABSA (Aspect-Based Sentiment Analysis) in low-resource languages, investigate zero-shot and few-shot learning methods.

- Cross-Lingual Transfer Learning in NLP

- Reviews:

- Conduct an extensive review of cross-lingual transfer models such as T5, XLM-R and mBERT.

- Main Papers: Unsupervised Cross-lingual Representation Learning at Scale (XLM-R; Conneau et al., 2020), BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding (Devlin et al., 2019; the multilingual BERT release is commonly called mBERT).

- Issues:

- Resource Scarcity: Many languages lack sufficient training data.

- Translation Bias: Model performance is strongly affected by artifacts introduced in translation.

- Research Concepts:

- Use pre-trained multilingual models to design domain adaptation algorithms for specific NLP tasks across diverse languages.

- Apply meta-learning techniques to improve the adaptation process.

- Explainable NLP Models

- Reviews:

- Examine current explainability techniques for NLP models, such as attention-based visualizations, LIME and SHAP.

- Main Papers: Attention is not Explanation (Jain and Wallace, 2019), "Why Should I Trust You?": Explaining the Predictions of Any Classifier (LIME; Ribeiro et al., 2016).

- Issues:

- Interpretability: Transformer models such as BERT are difficult to interpret.

- Bias Mitigation: It is difficult to trace which biased factors contribute to a model's predictions.

- Research Concepts:

- Combine attention visualization with counterfactual analysis to design new interpretability techniques.

- Create a framework that systematically detects and mitigates bias in NLP models.

- Few-Shot Learning and Zero-Shot Learning in NLP

- Reviews:

- Provide a detailed survey of zero-shot and few-shot learning models such as T5, Prototypical Networks and GPT-3.

- Main Papers: Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer (T5; Raffel et al., 2020), Language Models are Few-Shot Learners (GPT-3; Brown et al., 2020).

- Issues:

- Few-Shot Generalization: Models struggle to generalize from only a handful of examples.

- Task-Specific Prompts: Effective prompts must be designed for each task, which is difficult to do well.

- Research Concepts:

- Design new prompt engineering techniques that use reinforcement learning to search for effective prompts.

- Improve few-shot adaptation across tasks by combining transfer learning and meta-learning methods.
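
To make the few-shot setting concrete, here is a minimal sketch of nearest-prototype classification, the core idea behind the Prototypical Networks mentioned above. The 2-D "embeddings", the labels and the function names are hypothetical; a real system would obtain the vectors from a trained encoder.

```python
from math import dist

def class_prototypes(support):
    """Average the support embeddings of each class into one prototype."""
    prototypes = {}
    for label, vectors in support.items():
        dim = len(vectors[0])
        prototypes[label] = [
            sum(v[i] for v in vectors) / len(vectors) for i in range(dim)
        ]
    return prototypes

def classify(query, prototypes):
    """Assign the query to the class with the nearest prototype (Euclidean)."""
    return min(prototypes, key=lambda label: dist(query, prototypes[label]))

# Hypothetical 2-D sentence embeddings, two labeled examples per class.
support = {
    "positive": [[0.9, 0.1], [0.8, 0.3]],
    "negative": [[0.1, 0.9], [0.2, 0.7]],
}
prototypes = class_prototypes(support)
print(classify([0.7, 0.2], prototypes))  # prints "positive"
```

Because a class is represented only by the mean of its few examples, adding a new class requires no retraining of the classifier head, which is what makes the approach attractive when labeled data is scarce.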

- Graph Neural Networks (GNNs) in NLP

- Reviews:

- Explore the use of GNNs (Graph Neural Networks) in NLP tasks such as relation extraction, knowledge graph completion and entity linking.

- Main Papers: Heterogeneous Graph Attention Network (Wang et al., 2019), Graph Convolutional Networks for Text Classification (Yao et al., 2019).

- Issues:

- Graph Construction: Building high-quality text graphs is difficult.

- Graph Alignment: Graphs from different sources must be aligned effectively for integrated analysis.

- Research Concepts:

- Create a graph fusion model that aligns multi-source text graphs to improve relation extraction.

- For open-domain question answering, build a hybrid model that integrates GNNs with attention mechanisms.
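
As a rough illustration of how a graph convolutional layer propagates information over a text graph, the sketch below implements one step of the commonly used rule ReLU(Â H W), where Â is the adjacency matrix with self-loops, symmetrically normalized. The two-node graph, the feature matrix and the identity weights are made-up toy values, and the helper names are our own.

```python
from math import sqrt

def normalize_adjacency(adj):
    """Return D^(-1/2) (A + I) D^(-1/2) for a square adjacency matrix."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
             for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / sqrt(deg[i] * deg[j]) for j in range(n)]
            for i in range(n)]

def matmul(a, b):
    """Plain matrix product of two lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]

def gcn_layer(adj, features, weights):
    """One propagation step: ReLU(A_norm @ H @ W)."""
    hidden = matmul(matmul(normalize_adjacency(adj), features), weights)
    return [[max(0.0, x) for x in row] for row in hidden]

# Toy graph: two connected nodes (e.g. a word node and a document node).
adj = [[0, 1], [1, 0]]
features = [[1.0, 0.0], [0.0, 1.0]]   # one-hot node features
weights = [[1.0, 0.0], [0.0, 1.0]]    # identity weights, for readability
print(gcn_layer(adj, features, weights))  # prints [[0.5, 0.5], [0.5, 0.5]]
```

After one step each node's representation is an average over itself and its neighbor, which is why the quality of the constructed graph matters so much for the issues listed above.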

- Multi-Modal Learning for NLP Tasks

- Reviews:

- Carry out an extensive review of multi-modal learning, with emphasis on fusing audio, text and visual features.

- Main Papers: LXMERT: Learning Cross-Modality Encoder Representations from Transformers (Tan and Bansal, 2019), VisualBERT: A Simple and Performant Baseline for Vision and Language (Li et al., 2019).

- Issues:

- Feature Alignment: Features from different modalities must be aligned with one another.

- Cross-Modal Interaction: Efficient cross-modal reasoning across audio, image and text is needed.

- Research Concepts:

- Develop a cross-modal attention mechanism to improve multimodal sentiment analysis.

- Design a generative model that learns joint representations from both textual and visual features.
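
One way to picture a cross-modal attention mechanism is scaled dot-product attention in which text tokens act as queries and image regions supply the keys and values. The sketch below is a simplified illustration under that assumption: it omits the learned projection matrices a real model would use, and the vectors are invented toy values.

```python
from math import exp, sqrt

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_modal_attention(text_queries, image_keys, image_values):
    """Each text token attends over all image regions."""
    d = len(image_keys[0])
    dim_v = len(image_values[0])
    outputs = []
    for q in text_queries:
        # Similarity of this text token to every image region.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / sqrt(d)
                  for k in image_keys]
        weights = softmax(scores)
        # Weighted sum of image-region values.
        outputs.append([sum(w * v[j] for w, v in zip(weights, image_values))
                        for j in range(dim_v)])
    return outputs

# Hypothetical features: one text token, two image regions.
text_queries = [[1.0, 0.0]]
image_keys = [[1.0, 0.0], [0.0, 1.0]]
image_values = [[1.0, 0.0], [0.0, 1.0]]
print(cross_modal_attention(text_queries, image_keys, image_values))
```

The text token puts more weight on the first region because its key is more similar to the query; in a sentiment model, the resulting vector would be combined with the text representation before classification.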

- Open-Domain Question Answering (QA)

- Reviews:

- Provide an overview of open-domain QA (Question Answering), from conventional IR-driven techniques to the latest RAG (Retrieval-Augmented Generation) frameworks.

- Main Papers: RAG: Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks (Lewis et al., 2020), DrQA: Reading Wikipedia to Answer Open-Domain Questions (Chen et al., 2017).

- Issues:

- Knowledge Base Construction: Knowledge bases must be built and then kept up to date.

- Long-Range Reasoning: Questions that span several domains require reasoning over multiple steps.

- Research Concepts:

- Design a knowledge retrieval system that updates itself automatically as new data arrives.

- Combine multi-hop reasoning with attention-based mechanisms for complex question analysis.
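
The retrieval step that both IR-driven pipelines and RAG-style systems depend on can be sketched with a small TF-IDF retriever. This is only an illustrative toy under our own assumptions: whitespace tokenization, a smoothed IDF formula chosen for simplicity, and a three-document "knowledge base" invented for the example. Production systems use far larger indexes or dense retrievers.

```python
import math
from collections import Counter

def tokenize(text):
    return text.lower().split()

def build_index(docs):
    """Compute smoothed IDF weights and a TF-IDF vector per document."""
    tokenized = [tokenize(d) for d in docs]
    n = len(docs)
    df = Counter(term for toks in tokenized for term in set(toks))
    idf = {t: math.log((1 + n) / (1 + c)) + 1.0 for t, c in df.items()}
    vectors = [{t: c * idf[t] for t, c in Counter(toks).items()}
               for toks in tokenized]
    return idf, vectors

def cosine(a, b):
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    na = math.sqrt(sum(w * w for w in a.values()))
    nb = math.sqrt(sum(w * w for w in b.values()))
    return dot / (na * nb) if na and nb else 0.0

def retrieve(question, docs, idf, vectors, k=1):
    """Return the k documents most similar to the question."""
    q = {t: c * idf.get(t, 0.0)
         for t, c in Counter(tokenize(question)).items()}
    ranked = sorted(range(len(docs)),
                    key=lambda i: cosine(q, vectors[i]), reverse=True)
    return [docs[i] for i in ranked[:k]]

docs = [
    "Paris is the capital of France",
    "Berlin is the capital of Germany",
    "The Nile is a river in Africa",
]
idf, vectors = build_index(docs)
print(retrieve("what is the capital of France", docs, idf, vectors))
```

Keeping the index separate from the reader or generator means new documents only require rebuilding the index, which is one simple route toward the self-updating retrieval system proposed above.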

- Bias and Fairness in NLP

- Reviews:

- Survey methods for detecting and reducing bias in word embeddings and NLP models.

- Main Papers: Gender and Racial Bias in BERT Models (Zhao et al., 2019), Man is to Computer Programmer as Woman is to Homemaker? (Bolukbasi et al., 2016).

- Issues:

- Unconscious Bias: Pre-trained embeddings carry biases that were absorbed from their training data.

- Systematic Fairness: Outcomes should be fair across different demographic groups.

- Research Concepts:

- Build an effective adversarial debiasing model, particularly for transformer-based models.

- Design systematic fairness evaluation metrics that support intersectional analysis.
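
For word embeddings, a common starting point is projection-based debiasing: estimate a bias direction from definitional word pairs, then remove that component from words that should be neutral. The sketch below is a simplified version of that idea. It averages pair differences rather than using PCA, skips the renormalization and equalization steps found in the literature, and uses made-up 2-D vectors.

```python
from math import sqrt

def unit(v):
    """Scale a vector to unit length."""
    norm = sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def bias_direction(pairs):
    """Average the difference vectors of definitional pairs, e.g. (he, she)."""
    dim = len(pairs[0][0])
    diff = [sum(a[i] - b[i] for a, b in pairs) / len(pairs)
            for i in range(dim)]
    return unit(diff)

def neutralize(vector, direction):
    """Remove the component of `vector` that lies along `direction`."""
    proj = sum(x * d for x, d in zip(vector, direction))
    return [x - proj * d for x, d in zip(vector, direction)]

# Hypothetical 2-D embeddings for illustration only.
he, she = [1.0, 0.2], [-1.0, 0.2]
programmer = [0.4, 0.9]

g = bias_direction([(he, she)])
debiased = neutralize(programmer, g)
print(debiased)  # the first coordinate (the bias axis here) becomes 0.0
```

A projection like this only removes a linear component, so it makes a useful baseline against which adversarial debiasing methods for transformer models can be compared.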

- Incremental Learning for NLP Models

- Reviews:

- Summarize incremental and continual learning techniques as they apply to NLP.

- Main Papers: Online Continual Learning with Maximally Interfered Retrieval (Aljundi et al., 2019), Overcoming Catastrophic Forgetting in Neural Networks, which introduces Elastic Weight Consolidation (Kirkpatrick et al., 2017).

- Issues:

- Catastrophic Forgetting: After learning new tasks, a model loses the knowledge it acquired on previous tasks.

- Efficient Memory Management: New data must be accommodated within a limited memory budget.

- Research Concepts:

- Develop a memory-augmented network for continual learning in NLP tasks.

- Create regularization techniques that reduce forgetting in transformer-based models.
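
Regularization against forgetting can be illustrated with the penalty used in Elastic Weight Consolidation: parameters that were important for the old task, as measured by a Fisher information estimate, are pulled back toward their old values while the new task is learned. The sketch below computes only that penalty term on flat parameter lists. The numbers are invented, and a real implementation would estimate the Fisher values from data and apply the penalty inside a training loop.

```python
def ewc_penalty(params, old_params, fisher, lam):
    """(lam / 2) * sum_i F_i * (theta_i - theta_old_i)^2"""
    return 0.5 * lam * sum(
        f * (p - o) ** 2 for p, o, f in zip(params, old_params, fisher)
    )

def total_loss(new_task_loss, params, old_params, fisher, lam=1.0):
    """Loss on the new task plus the penalty for drifting from the old task."""
    return new_task_loss + ewc_penalty(params, old_params, fisher, lam)

# Hypothetical values: the first weight mattered a lot for the old task
# (large Fisher value) and has not moved; the second mattered little
# and has moved by 1.0.
params = [1.0, 2.0]
old_params = [1.0, 1.0]
fisher = [10.0, 0.5]

print(ewc_penalty(params, old_params, fisher, lam=2.0))  # prints 0.5
print(total_loss(0.3, params, old_params, fisher, lam=2.0))
```

The hyperparameter `lam` controls the trade-off: a larger value protects old-task knowledge more strongly at the cost of slower adaptation to the new task.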

- Emergent Communication in NLP Models

- Reviews:

- Analyze the research literature on developmental linguistics and emergent communication in artificial agents.

- Main Papers: Compositional Language Emerges in Multi-Agent Learning (Lowe et al., 2019), Emergent Communication through Negotiation (Cao et al., 2018).

- Issues:

- Symbol Grounding: Abstract symbols are difficult to map to actions or real-world objects.

- Compositionality: Agents need to develop an interpretable and compositional communication protocol.

- Research Concepts:

- Create a reinforcement learning model for emergent languages with compositional structure.

- Design negotiation-based agent communication for practical task completion.

As a researcher in Natural Language Processing (NLP) or Computational Linguistics (CL), which programming languages should one be fluent in?

To do impactful research in NLP (Natural Language Processing) and CL (Computational Linguistics), you should be proficient in the following programming languages and their associated tools:

- Python

- Relevance: Python is the most widely used language in NLP because of its active community, its integration with machine learning frameworks and its extensive ecosystem.

- Fundamental Libraries and tools:

- NLTK (Natural Language Toolkit): A comprehensive library for basic NLP tasks.

- spaCy: A fast and robust library for efficient text processing.

- Transformers (by Hugging Face): Provides pre-trained NLP models such as GPT-2 and BERT.

- Scikit-learn: A general ML library for conventional machine learning techniques and feature extraction.

- Gensim: Topic modeling and word embeddings.

- Flair: A user-friendly NLP framework for sequence tagging and text classification.

- PyTorch / TensorFlow: Deep learning frameworks.

- R

- Relevance: R is commonly used for linguistic analysis and is well suited to visualization and statistical analysis.

- Fundamental Libraries and tools:

- tm (Text Mining): A text mining package.

- quanteda: Quantitative text analysis and visualization.

- tidytext: Text mining with tidy data tools.

- text2vec: Fast text vectorization and modeling.

- Java

- Relevance: Several established NLP tools are written in Java, and it remains common in legacy NLP systems.

- Fundamental Libraries and tools:

- Stanford NLP: Particularly useful for NER (Named Entity Recognition), POS tagging and dependency parsing.

- Apache OpenNLP: A toolkit for ML-based NLP tasks.

- Mallet: Topic modeling toolbox.

- LingPipe: A toolkit for linguistic analysis of text.

- MATLAB

- Relevance: In NLP projects, MATLAB is typically used for signal processing and automated text processing.

- Fundamental Libraries and tools:

- Text Analytics Toolbox: Sentiment analysis, word embeddings and text analysis.

- Deep Learning Toolbox: Deep learning models.

- C++

- Relevance: C++ is used to build high-throughput NLP applications.

- Fundamental Libraries and tools:

- fastText: Efficient word representations and text classification.

- TreeTagger: Lemmatization and POS tagging.

- CoreNLP: Stanford's toolkit is itself written in Java, but programs in other languages, including C++, can reach it through its server interface.

- Perl

- Relevance: Perl has traditionally been used in text processing and bioinformatics.

- Fundamental Libraries and tools:

- Lingua::EN::Tagger: POS tagging for English text.

- Lingua::EN::Fathom: Readability measures and text statistics.

NLP PhD Research Ideas

The following NLP PhD research ideas are shared by matlabsimulation.com experts:

- Semi-Supervised Natural Language Processing Approach for Fine-Grained Classification of Medical Reports

- Riemannian Adaptive Optimization Algorithm and its Application to Natural Language Processing

- Natural Language Processing Technique for Generation of SQL Queries Dynamically

- Anomaly Detection of System Logs Based on Natural Language Processing and Deep Learning

- Natural Language Processing Approach for Learning Process Analysis in a Bioinformatics Course

- TinyGenius: Intertwining Natural Language Processing with Microtask Crowdsourcing for Scholarly Knowledge Graph Creation

- Natural language processing to classify named entities of the Brazilian Union Official Diary

- Similarity Analysis of Patent Claims Using Natural Language Processing Techniques

- Multi-Models from Computer Vision to Natural Language Processing for Cheapfakes Detection

- Video Based Transcript Summarizer for Online Courses using Natural Language Processing

- Graph-Based Methods for Natural Language Processing and Understanding—A Survey and Analysis

- Visual Analysis and Mining of Knowledge Graph for Power Network Data Based on Natural Language Processing

- Natural Language Processing on Diverse Data Layers Through Microservice Architecture

- Constructing activity diagrams from Arabic user requirements using Natural Language Processing tool

- A Natural Language Processing Based Planetary Gearbox Fault Diagnosis with Acoustic Emission Signals

- Evaluation of a domain independent approach to natural language processing for game-like user interfaces

- Classification of Sentiments of the Roman Urdu Reviews of Daraz Products using Natural Language Processing Approach

- SecureNLP: A System for Multi-Party Privacy-Preserving Natural Language Processing

- Extracting Alternative Splicing Information from Captions and Abstracts Using Natural Language Processing

- Evaluation of Document-Level Identification of Pulmonary Nodules in Radiology Reports Using FLAIR Natural Language Processing Framework